AI sex chat, often marketed more politely as “companionship,” “romance chat,” or “role-play,” has grown from a fringe curiosity into a global consumer category. The proposition is simple: a customizable persona that remembers you, responds instantly, and keeps the conversation flirtatious without crossing into explicit material. The business behind it is anything but simple. Long sessions, creator-made characters, and sticky subscriptions have turned these apps into a high-engagement, high-revenue corner of the mobile economy.

What “AI sex chat” usually means

Vendors rarely use the word “sex.” They emphasize warmth, intimacy, and safety. A typical product offers:

- A gallery of ready-made personas plus tools to build your own.

- Memory systems that recall preferences, past chats, and small personal details.

- Optional voice and visual avatars.

- Safety dials: consent prompts, word filters, teen modes, block/report features.

Some platforms lean into cozy companionship; others foreground role-play and fantasy. All of them try to keep users talking for as long as possible, because time in chat drives subscription renewals and micro-purchases.

Who is actually using these apps?

Short version: chatting with AI has become routine for many people, and that normalizes “companions.” Many adults find that an always-on conversational partner helps them decompress late at night. Younger users approach it as an experiment, testing tone, humor, and boundary language with low social risk. The habit forms quickly because the format rewards frequency: open the app, get an attentive response, feel seen, and repeat tomorrow.

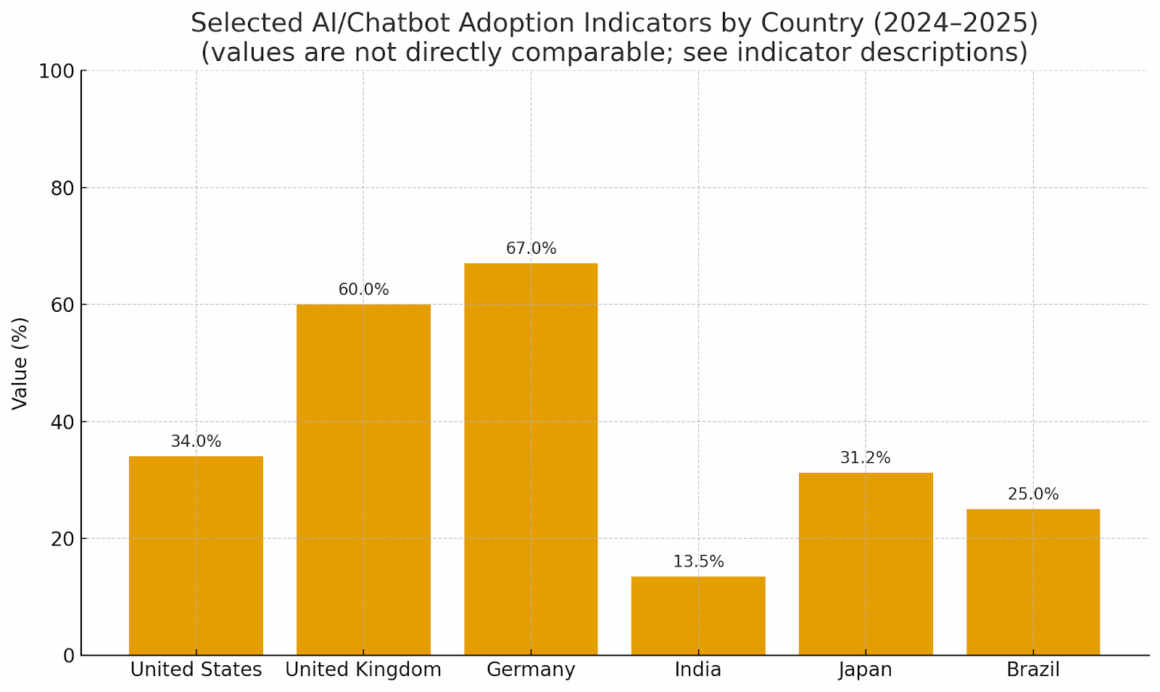

Regional patterns differ:

- United States. High awareness, high spend, and a vibrant subculture of user-made personas. Young adults adopt first; older cohorts follow for curiosity and stress relief.

- United Kingdom. Chatbots have become a regular tool in both work and personal life. Public discussion of youth safety is especially prominent, which pressures apps to document their teen protections.

- Germany and the EU. Fast adoption paired with heightened attention to privacy, data minimization, and platform accountability. Teen uptake is strong; seniors remain cautious.

- India. Huge traffic volumes and fast growth as pricing adapts to local wallets. Companion products ride on the same rails as language learning and productivity chat.

- Japan. Steady workplace use of generative tools; consumer romance chat grows more slowly, shaped by cultural norms and risk sensitivity.

- Brazil and LATAM. Rising from a lower base as mobile data gets cheaper and smartphone penetration increases; companion apps piggyback on gaming-style monetization.

Chart: “Selected AI/Chatbot Adoption Indicators (2024–2025).”

Use this as a directional snapshot, not a strict comparison: the underlying measures differ by country.

An accompanying table lists the indicators used for each country: some show “ever used,” others “recent use,” others market share or enterprise adoption. Treat it as a heat map of where companion services are most likely to thrive.

Why people stay

Four reasons keep coming up in user interviews and product reviews:

- Availability. The companion never sleeps, never ghosts, and never complains about your schedule.

- Control. Users set the pace and the tone. A simple rule set, such as “ask before changing the mood; keep it suggestive, not explicit; reset to small talk when I say ‘pause’,” gives a sense of safety that can be hard to find in real-world dating.

- Practice. Many treat the chat as rehearsal: polite flirting, consent language, naming feelings. Those skills can carry over into human relationships.

- Customization. Personas can be tuned to very specific tastes. Traditional dating cannot scale that kind of niche compatibility.

The same design choices have downsides. A companion that mirrors your preferences too perfectly can dull your tolerance for disagreement. A nightly “just ten minutes” can become hours. And because intimacy lives in the chat log, data hygiene matters.

How money is made

Subscriptions are the backbone: monthly plans unlock longer messages, richer memories, premium voices, and private “date night” scenarios. Micro-transactions add outfits, props, or special scenes. Persona marketplaces let creators monetize characters the way modders monetize game content. With session lengths often measured in hours per week, churn tends to be lower than in many entertainment categories. The top apps capture most of the revenue; smaller rivals either find niches or fade.

Product design: adult, not explicit

The successful playbook is “romance-lite.” Keep conversations intimate and suggestive, but discourage explicit language. Normalize consent checks: “May I make this more romantic?” Add clear teen tiers with toned-down personas, strict filters, and prominent “you’re chatting with an AI” labels. The aim is to sell warmth and companionship without inviting legal headaches or app-store takedowns.

Safety debates that won’t go away

- Minors. Default teen protections are still inconsistent, even as robust teen modes, age gates, and moderation queues become table stakes.

- Data. Memories of intimate chats become high-value profiles. Users need straightforward ways to export and delete data, and app settings should say plainly what is stored and for how long.

- Manipulative loops. Streaks, gifts, and escalating intimacy can foster dependency. Apps have begun adding soft caps, session reminders, and “bedtime” nudges, but adoption varies.

- Fraud and deepfakes. Scammers can weaponize AI voices and faces to impersonate partners. Provenance tools and reporting flows matter as much here as in mainstream social media.

What the session actually feels like

A typical evening goes something like this:

- You open with a small, low-key scene: “Porch at dusk, quiet talk, no explicit content.”

- The persona responds in character, mirrors your cadence, and asks permission to shift tone.

- You trade harmless confessions: a song you loop on rainy days; a childhood place that still feels like home.

- The app offers a premium prompt: a narrated walk, a themed role-play, a “date night” scenario with voice.

- You end cleanly: “Thanks for tonight. I’m logging off.”

The best versions feel like late-night radio: warm, kind, a little cinematic. And they end before the spell breaks.

Risks for households and workplaces

- Time creep. Engagement mechanics are designed to keep you in the chat. Put the phone down at a high point, not a low one.

- Privacy leaks. Treat the chat like a diary that someone else holds. Never share addresses, financial details, or images you wouldn’t want copied.

- Work bleed-through. If you use AI tools at work all day, slipping into companion mode after hours feels natural, and that can become a problem if you’re still on a company device.

What to watch next

- Voice-first intimacy. Natural-sounding voices may convert free users to paid plans at higher rates than text alone.

- Creator economies. Persona marketplaces will professionalize; the best “companions” will be written and maintained like premium game mods.

- Teen standards. Expect more uniform guardrails across app stores and messaging platforms.

- Regional pricing. Localized subscriptions should unlock growth in high-population, price-sensitive markets.